Welcome to Young Money! If you’re new here, you can join the tens of thousands of subscribers receiving my essays each week by adding your email below.

Yesterday, I read a New Yorker piece critiquing a hot new app called “Blinkist.” Maybe you’ve heard of it. If you haven’t, here’s an overview from the article:

> Blinkist is an app. If I had to summarize what it does, I would say that it summarizes like crazy. It takes an existing book and crunches it down to a series of what are called Blinks. On average, these amount to around two thousand words.

For context, the average adult novel is ~90,000 words, meaning that Blinkist has effectively turned books into blog posts. And, if reading the already-condensed “book” is too much of a hassle, you can listen to a 2,000-word audio summary instead.

The whole thing feels dystopian. It’s like Blinkist’s founders looked at literature and said, “How do we remove the ‘reading’ component from reading? What if you could just get to the point?” It’s no different than googling a summary of Game of Thrones instead of watching the show, and basking in the knowledge gained from your Wikipedia skim. Is this more efficient? I guess? But is efficiency worth it? Probably not. The point of reading isn’t just to “get to the point,” after all.

Unfortunately, generative AI is pushing much of the internet in this same direction.

Since the release of ChatGPT, nine different news organizations and publishers have signed licensing deals with OpenAI. Many of these deals grant the AI giant access to the publishers’ paywalled archives to train its large language models (LLMs), and some of the deals, such as one with The Financial Times, will allow ChatGPT users to see “select attributed summaries, quotes and links to FT journalism in response to relevant queries.”

Long-form journalism is incredibly valuable for LLMs, and for a few million dollars and the occasional attribution link, publishers gave OpenAI exactly what it wanted: thousands (or more) of archived and new articles to train its models on.

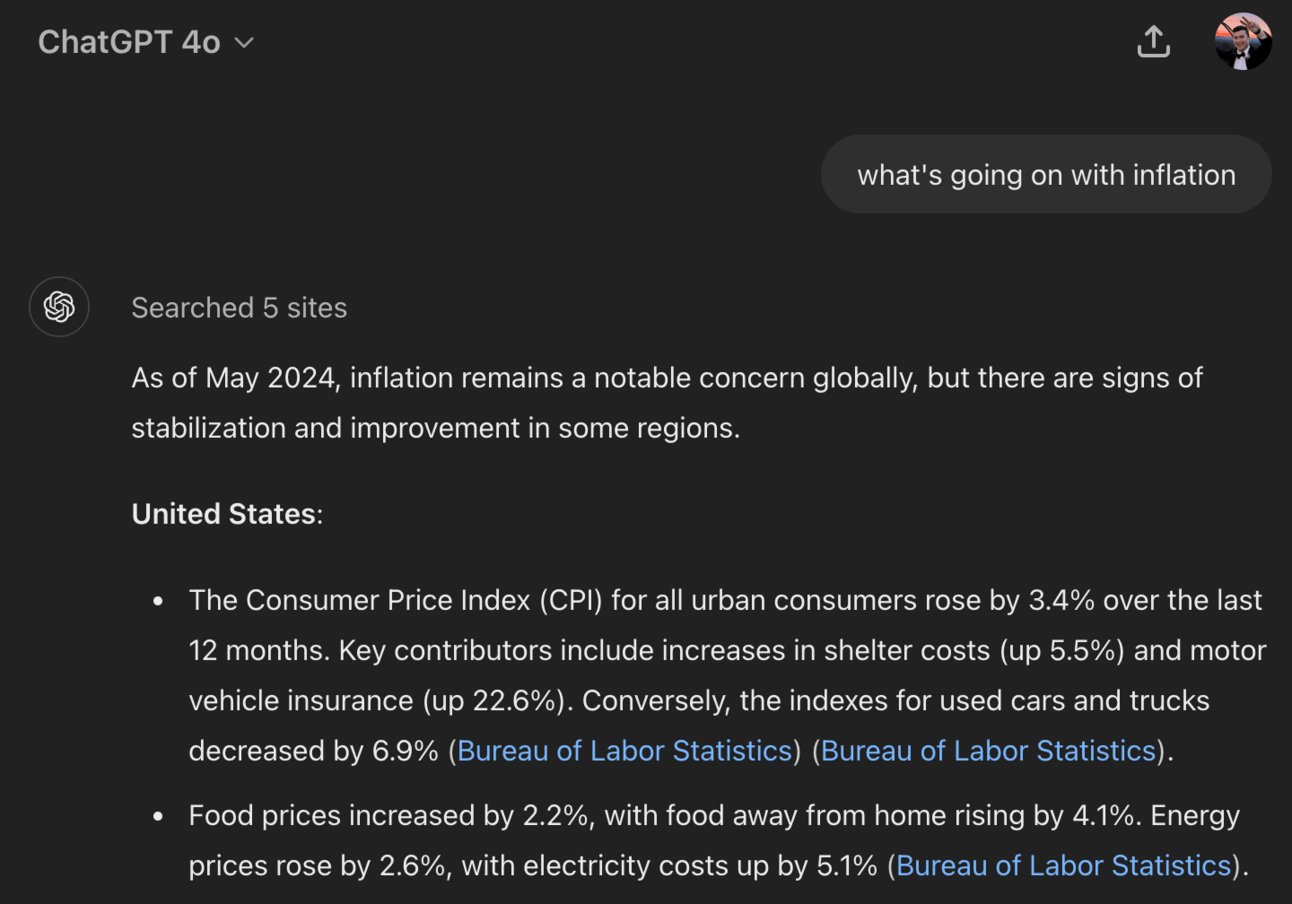

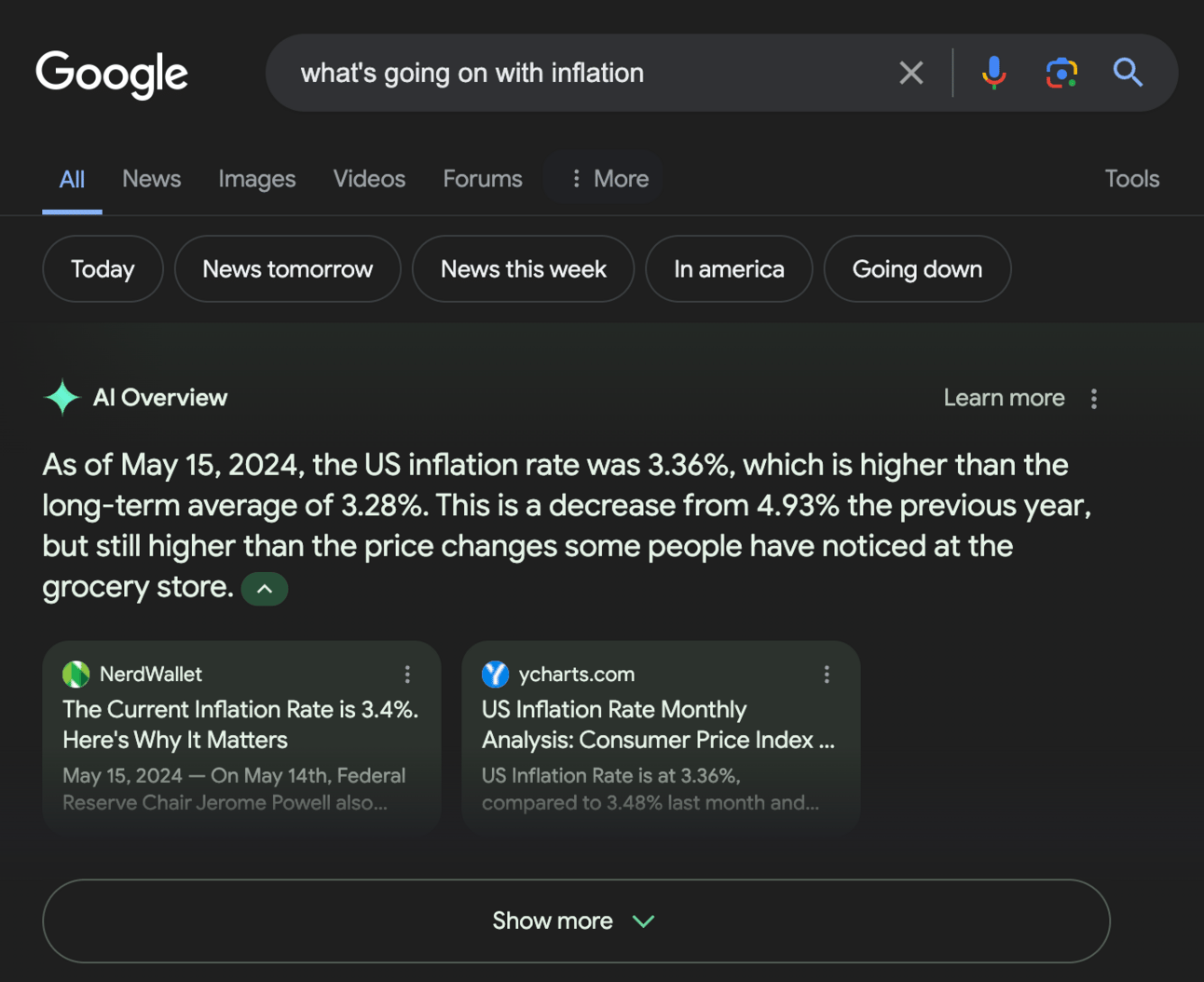

Why do generative AI companies want so much publisher data? My guess is to eventually displace the publishers themselves. Generative AI platforms provide answers to user queries based on their training data. Access to archived and real-time articles lets them (you guessed it) provide more detailed responses, becoming pseudo-search engines in the process. Take this ChatGPT prompt asking about inflation, for example:

Okay, sure, the answer links to different sources, but how many people are going to click a link? Few, if any. And now, mimicking ChatGPT, Google is beginning to share AI-generated responses above its normal search responses as well.

I don’t understand why publishers are signing licensing deals with generative AI companies that will likely steal their traffic. The sequence is easy to predict: OpenAI pays you a multimillion-dollar licensing fee and publishes (potentially inaccurate) summaries of your journalists’ work in its results (with attribution links, of course). Users skim those results, don’t bother going to your site, and continue with their day.

It feels like a broken internet.

From a user standpoint, using ChatGPT as a fact finder for current news seems efficient, especially if it can summarize current events. Getting a breakdown of the US inflation situation, for example, is useful. But there’s no guarantee that the provided summaries are accurate (LLMs match patterns; they aren’t search engines), and in the longer term, if users come to rely on generative AI companies instead of the underlying media sources, the system stops working.

ChatGPT needs articles from publications to generate accurate answers, those publications need paying users to operate, and many of those users may opt out of their subscriptions (or, for free sites, ad revenue may plummet from loss of traffic) if they can get their answers from ChatGPT. When too many readers stop visiting media sites, the system breaks down.

For publishers who focus on long-form commentary over breaking news, these deals make even less sense. The Atlantic, which just a week ago published an excellent op-ed from The Information’s Jessica Lessin on the dangers of partnering with AI platforms, announced its own deal with OpenAI a few days ago, further empowering the company’s summarization machine.

Generative AI has, in many instances, been a fun and useful tool, but I just don’t see how it can integrate within the media ecosystem without breaking it along the way. And, beyond that, an internet driven by large language model summarizations just feels bleak, no? Do we seriously want a one-dimensional internet where we rely on a central aggregator for everything?

- Jack


Young Money is now an ad-free, reader-supported publication. This structure has created a better experience for both the reader and the writer, and it allows me to focus on producing good work instead of managing ad placements. In addition to helping support my newsletter, paid subscribers get access to additional content, including Q&As, book reviews, and more. If you’re a long-time reader who would like to further support Young Money, you can do so by clicking below. Thanks!

Jack's Picks

This conversation between Sam Parr and ex-Navy SEAL turned author Jack Carr was fantastic.

Check out Morgan Housel’s latest blog on redefining “good work.”

If you didn’t click the link above, here is Jessica Lessin’s piece on the problems with these AI partnerships.